Normalizing Logic Block

Description

The Normalizing Logic Block normalizes data to a consistent time signature. That is, it shifts incoming data points so that they align with the day, the hour, or a standard fraction of an hour.

The most common use of the Logic Block is to time-align input data series to whole hours or standard fractions of an hour. In this respect, it is very similar to the Aggregate Logic Block. To achieve this, set the normalizing interval to the same interval as the input series. Two methods of time alignment are available: constant alignment and linear interpolation.

An alternative use of the Logic Block is to pad a data series with additional data points by using the Logic Block’s primary interpolation function. The Logic Block can provide a linear interpolation based on two data points. When used this way, the Normalizing Logic Block should not be followed by an Aggregate Logic Block set to sum, because the sum would include interpolated points and would not represent actual meter data.
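To see why summing a padded series is misleading, here is a small worked sketch. The helper `pad_linear` and the sample readings are hypothetical and only illustrate the arithmetic; they are not the product's implementation.

```python
def pad_linear(v0, v1, n):
    """Return n + 1 evenly spaced values from v0 to v1 inclusive."""
    return [v0 + (v1 - v0) * k / n for k in range(n + 1)]

# Two hypothetical hourly meter readings (interval consumption).
hourly = [4.0, 8.0]

# Padding the hour into four 15-minute steps adds three interpolated points.
padded = pad_linear(hourly[0], hourly[1], 4)

print(sum(hourly))  # 12.0, the sum of the actual readings
print(sum(padded))  # 30.0, inflated by the interpolated points
```

The padded series follows the same trend, but its sum grows with the number of interpolated points, which is why a sum aggregation after padding does not reflect metered values.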

Functionality

The Logic Block can be used to normalize input data to a consistent time scheme and interpolate between data points to increase the number of samples within a data series.

Input: The Normalizing Logic Block takes as input raw or modified data from a trend log.

Output: A normalized data series, aligned to the hour, which may have interpolated data points based on the normalizing interval.
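The aligned timestamps of the output can be pictured as a grid of interval boundaries. The sketch below is one possible way to generate such a grid, assuming sub-daily intervals that divide the day evenly and boundaries counted from midnight; the function name `interval_grid` is hypothetical and the product may compute boundaries differently.

```python
from datetime import datetime, timedelta

def interval_grid(start, end, interval):
    """Yield interval boundaries in [start, end], counted from midnight of start's day."""
    midnight = start.replace(hour=0, minute=0, second=0, microsecond=0)
    elapsed = start - midnight
    # Ceiling division: number of whole intervals needed to reach or pass `start`.
    steps = -(-elapsed // interval)
    tick = midnight + steps * interval
    while tick <= end:
        yield tick
        tick += interval

grid = list(interval_grid(datetime(2024, 1, 1, 10, 7),
                          datetime(2024, 1, 1, 11, 0),
                          timedelta(minutes=15)))
# grid holds 10:15, 10:30, 10:45 and 11:00
```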

Normalizing Algorithms

Two normalizing algorithms are available within this Logic Block: Constant and Linear.

The normalizing function includes outlier samples in the algorithm. By default, the normalizing block uses the last data point available before the period of computation (date range), provided that at least one data point is available in the previous month. This ensures that COV Trend Logs are normalized correctly and avoids using values that are too old (more than one month) to be relevant.
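The look-back rule above could be sketched as follows. This is a minimal illustration only: the function name `seed_point` is hypothetical, and treating "one month" as 31 days is an assumption, since the exact window is not specified here.

```python
from datetime import datetime, timedelta

def seed_point(samples, range_start, lookback=timedelta(days=31)):
    """Return the last (time, value) sample before range_start, or None.

    Samples older than `lookback` are ignored. The 31-day default is an
    assumption standing in for "the previous month".
    """
    earlier = [s for s in samples
               if range_start - lookback <= s[0] < range_start]
    return max(earlier, key=lambda s: s[0]) if earlier else None

samples = [
    (datetime(2024, 1, 20, 8, 0), 21.5),  # 40 days before the range: too old
    (datetime(2024, 2, 19, 8, 0), 22.0),  # 10 days before the range: usable
]
seed = seed_point(samples, datetime(2024, 2, 29, 8, 0))
```

For a COV (change of value) trend log, such a seed point supplies the value that was still in effect when the date range began.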

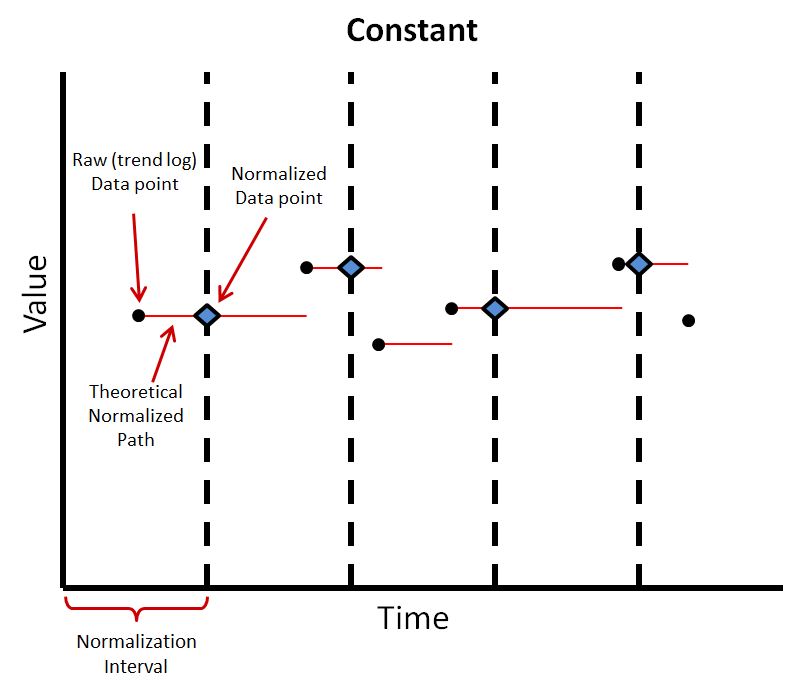

The Constant normalizing algorithm takes the value of the last data point at the interval. This has the advantage of using the most recent data point and requires little computation. It also leaves nothing implied, as each normalized value corresponds directly to an actual data point. It is commonly used with binary object inputs and outputs, as it produces no unwanted fractional values. However, if there is more than one data point within an interval, which is likely, only the last one is taken into account.
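A minimal sketch of constant normalization, under the assumption that each interval boundary receives the value of the most recent sample at or before it. The function name `normalize_constant` and the sample data are hypothetical.

```python
from bisect import bisect_right
from datetime import datetime, timedelta

def normalize_constant(samples, start, end, interval):
    """samples: (time, value) pairs sorted by time.

    Returns (boundary, value) pairs for each boundary in [start, end].
    Boundaries with no earlier sample are skipped.
    """
    times = [t for t, _ in samples]
    out = []
    tick = start
    while tick <= end:
        count = bisect_right(times, tick)  # samples with time <= tick
        if count > 0:
            out.append((tick, samples[count - 1][1]))
        tick += interval
    return out

# Hypothetical binary status samples (0 = off, 1 = on).
status = [
    (datetime(2024, 1, 1, 10, 40), 0),
    (datetime(2024, 1, 1, 11, 20), 1),
]
result = normalize_constant(status,
                            datetime(2024, 1, 1, 11, 0),
                            datetime(2024, 1, 1, 12, 0),
                            timedelta(minutes=30))
# 11:00 -> 0, 11:30 -> 1, 12:00 -> 1
```

Every output value is one of the input values, which is why this approach suits binary points.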

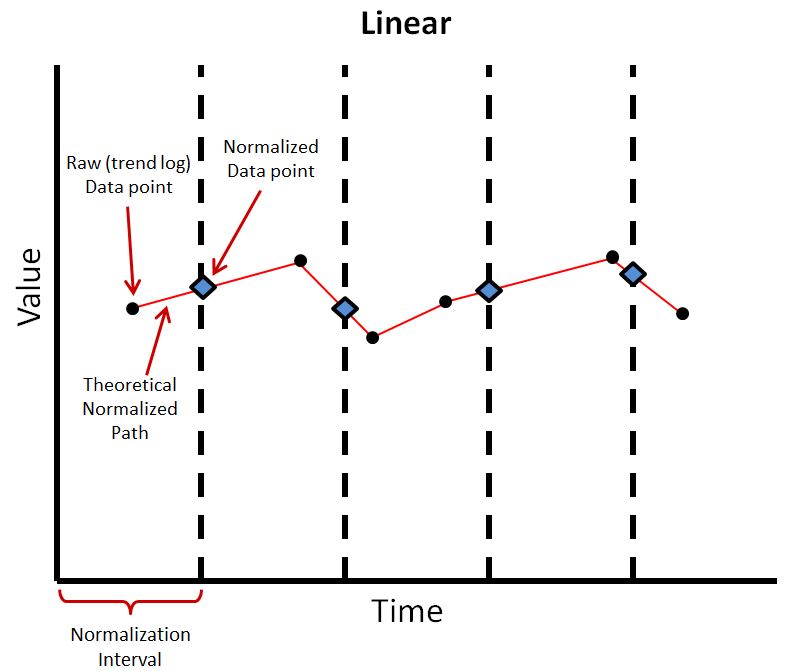

The Linear normalization algorithm computes a linear interpolation at each interval between the preceding and following data points. This allows the normalization to follow climbing and falling trends within the data series more accurately. This is the most common normalizing algorithm. However, it does not take into account more than one data point within an interval.
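A minimal sketch of linear normalization, assuming each interval boundary is interpolated between the nearest sample before it and the nearest sample after it. The function name `normalize_linear` and the sample data are hypothetical.

```python
from bisect import bisect_left
from datetime import datetime, timedelta

def normalize_linear(samples, start, end, interval):
    """samples: (time, value) pairs sorted by time.

    Returns (boundary, value) pairs for each boundary in [start, end].
    Boundaries without a sample on both sides are skipped.
    """
    times = [t for t, _ in samples]
    out = []
    tick = start
    while tick <= end:
        hi = bisect_left(times, tick)  # first sample with time >= tick
        if hi < len(times) and times[hi] == tick:
            out.append((tick, float(samples[hi][1])))  # exact hit
        elif 0 < hi < len(times):
            (t0, v0), (t1, v1) = samples[hi - 1], samples[hi]
            frac = (tick - t0) / (t1 - t0)  # position between the two samples
            out.append((tick, v0 + frac * (v1 - v0)))
        tick += interval
    return out

# Hypothetical temperature samples.
temps = [
    (datetime(2024, 1, 1, 10, 50), 10.0),
    (datetime(2024, 1, 1, 11, 10), 20.0),
    (datetime(2024, 1, 1, 11, 40), 26.0),
]
result = normalize_linear(temps,
                          datetime(2024, 1, 1, 11, 0),
                          datetime(2024, 1, 1, 11, 30),
                          timedelta(minutes=30))
# 11:00 lies halfway between 10.0 and 20.0, giving 15.0
```

Only the two samples that bracket each boundary are used, so any other samples inside the interval have no effect on the result.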

Block Configuration

Name: Choose the name associated with the Normalizing Logic Block, describing its function within the logic rule.

Normalization Algorithm: Here you can set the type of normalization the block will perform. Options are Constant and Linear.

Normalizing Interval: Select either a custom interval or a request interval.

- The Custom option holds the normalizing interval at a constant value, as defined by the creator of the logic rule. Selecting it adds another configuration parameter called “Custom Interval”, where the creator can set the interval to one of the following:

- Minutes

- 5 minutes

- 15 minutes

- 30 minutes

- Hourly

- Daily

- Weekly

- Monthly

- Annually

- The Request option is used when the normalizing interval will be defined later. It can be used when:

- Creating an insight rule: one can use an existing insight template and modify the normalizing interval within the setup page to suit one’s needs.

- Viewing charts or reports with a custom interval.